Sovereignty, Regulation, and the Power of Azure Confidential Computing

It’s hard to ignore how world events are shaping our digital strategies these days. We’re seeing more and more discussions about where data lives and who has access to it. To put things into perspective, in the last year, data breaches specifically tied to sovereignty issues have jumped by 70%. That highlights how crucial solid cloud governance is.

Think about it: the increasing geopolitical conversations, the worries about keeping data within certain borders (sovereignty), making sure everything is secure and private, not getting stuck with one provider (vendor lock-in), and even the thought of a “kill switch” – it all adds up to a pretty complex situation for anyone working with IT and the cloud. In this article, we’re going to break down these challenges and help you find a clear path forward.

Geopolitics, Sovereignty, and Trust in the Cloud

The rise in geopolitical tensions casts a shadow over where our data resides. Questions around which country’s laws govern your data become increasingly important. This isn’t just a theoretical discussion; it has real implications for compliance and how you protect your information. Data sovereignty – the idea that data is subject to the laws of the nation where it’s collected – is no longer a niche topic. It’s moving to the forefront of decision-making for organizations of all sizes.

To make informed decisions, we need to keep a cool head and understand the specifics of the legal frameworks that affect cloud data. A significant piece of legislation in this context is the U.S. Cloud Act (Clarifying Lawful Overseas Use of Data Act). This law requires U.S.-based cloud providers to comply with lawful U.S. requests for data in their possession, custody, or control, regardless of where that data is physically stored. This means that even if your data resides in a datacenter in the Netherlands, a U.S. company like Microsoft could be compelled to provide it under a U.S. legal order. This reality is a key consideration for many organizations, particularly those with strict data sovereignty requirements.

However, this isn’t solely a U.S. phenomenon. In the Netherlands, for example, we have the Intelligence and Security Services Act 2017 (Wiv 2017), often referred to as the “Sleepwet.” This law grants Dutch intelligence and security services the power to intercept communications on a large scale under certain conditions. The European Union has its own E-Evidence Regulation, which allows judicial authorities in one EU member state to request digital evidence directly from service providers in other member states. Additionally, the Dutch Computer Crime Act III (Wet computercriminaliteit III) gives law enforcement expanded powers to access computer systems in cases of serious crime.

The reality is that virtually every nation has its own set of laws and regulations that can compel access to data under specific circumstances. Therefore, no geographical location offers absolute immunity. While the physical location of a datacenter matters for latency and potentially for certain local regulations, the nationality of the cloud provider also brings its own jurisdictional considerations. U.S.-based companies operating datacenters globally are indeed subject to both the local laws where the datacenter is situated and to U.S. law, including the Cloud Act.

Security, Privacy, and Lock-In Concerns Remain

Beyond the complexities of international laws and data sovereignty, the fundamental needs for strong security and respect for privacy remain important for anyone using cloud services. You’re entrusting your data, often your most valuable asset, to a third party, and with that comes the expectation that they are doing everything they can to protect it. This isn’t just about preventing external attacks; it’s also about ensuring that your data is handled with the appropriate level of confidentiality within the cloud environment itself. Your customers, in turn, expect their personal information to be treated with the highest standards of privacy and in accordance with regulations like GDPR. Building and maintaining this trust is essential for any successful digital transformation.

Then there’s the very real worry about getting stuck with a particular cloud provider – vendor lock-in. The cloud offers incredible tools and services, but often these are specific to a certain platform. If you build your entire infrastructure and applications using these proprietary technologies, the thought of switching to another provider down the line can feel daunting, if not impossible, due to the potential costs, effort, and technical hurdles involved. This lack of flexibility can impact your long-term strategy and your ability to negotiate terms.

The Unmatched Pace of Innovation in the Public Cloud

Let’s be frank: when we talk about the speed of innovation, the total cost of ownership (TCO), the time to market (TTM) for new services, the ability to rapidly scale resources, and the drive towards modernization, private datacenters simply cannot keep pace with the public cloud. The sheer scale and investment that public cloud providers like Microsoft Azure can bring to bear on these fronts are unparalleled.

Consider the constant flow of new services, the advancements in AI and machine learning, the serverless capabilities that reduce operational overhead, and the cutting-edge security features that are continuously being rolled out. These innovations arrive at a cadence that a private datacenter, with its finite resources and focus, can rarely match. The agility and speed with which you can deploy and experiment with new technologies in Azure are significant advantages in today’s fast-moving digital world. Moreover, the economies of scale inherent in the public cloud often translate to a more favorable TCO over the long term, especially when factoring in the costs of hardware upgrades, maintenance, and staffing.

Addressing Security, Privacy, and Vendor Lock-In

Given that private datacenters can’t really compete with the public cloud on innovation, let’s return to sovereignty, security, privacy, and vendor lock-in, and look at how Microsoft Azure addresses each of them. Recognizing that these concerns are valid, Microsoft has developed a comprehensive approach aimed at providing a secure, privacy-respecting, and flexible cloud environment.

Regarding sovereignty and regulatory concerns, Microsoft has introduced the EU Data Boundary to help customers in the European Union keep their data within the EU. Furthermore, technologies like Customer Managed Keys (CMK) give you control over the encryption keys for your data. Azure’s robust encryption at rest and in transit adds another layer of protection, regardless of location. For the most stringent requirements, Azure Sovereign Landing Zones offer a dedicated environment with enhanced controls over data residency, access, and operations.

When it comes to security, Azure’s foundation is built on a commitment to protecting your data. Microsoft employs a defense-in-depth strategy, which includes physical security at its globally distributed datacenters, robust identity and access management, advanced threat protection, and comprehensive network security. Azure also provides a wealth of tools and services that help you secure your own workloads, such as Microsoft Defender for Cloud and Microsoft Sentinel, offering unified security management and threat intelligence. Continuous investment in security research and development helps Microsoft stay ahead of emerging threats. Microsoft also maintains a comprehensive portfolio of compliance certifications and attestations, including ISO 27001, ISO 27017, SOC 2, and many industry-specific and regional accreditations, which demonstrates its ongoing effort to meet global security and compliance requirements. Its global network of security experts and proactive approach further help to safeguard your workloads.

Regarding privacy, Microsoft’s commitment goes beyond just meeting regulatory requirements like GDPR. They are focused on giving you control over your data. This includes clear policies about data processing, transparency around how your data is handled, and the provision of tools that enable you to meet your own privacy obligations. Features like Customer Lockbox provide you with control over how Microsoft engineers access your data in support scenarios, requiring your explicit approval.

Addressing the concern of vendor lock-in, Microsoft recognizes the importance of flexibility. While Azure offers many platform-specific services that provide significant benefits, they also actively support open standards and interoperability. For instance, their strong support for Kubernetes (through Azure Kubernetes Service - AKS) allows you to orchestrate containerized applications in a way that offers a degree of portability. Furthermore, Azure Arc enables you to extend Azure services and management to other environments, including on-premises and other clouds, providing a more hybrid and multi-cloud approach. While embracing cloud-native services often unlocks the most value, Azure’s strategy acknowledges the need for options and a degree of openness.

So, while the concerns around security, privacy, and lock-in are valid, Microsoft Azure’s platform, services, and strategic direction are designed to provide a secure, privacy-focused, and increasingly flexible cloud experience.

Diving into Confidential Computing

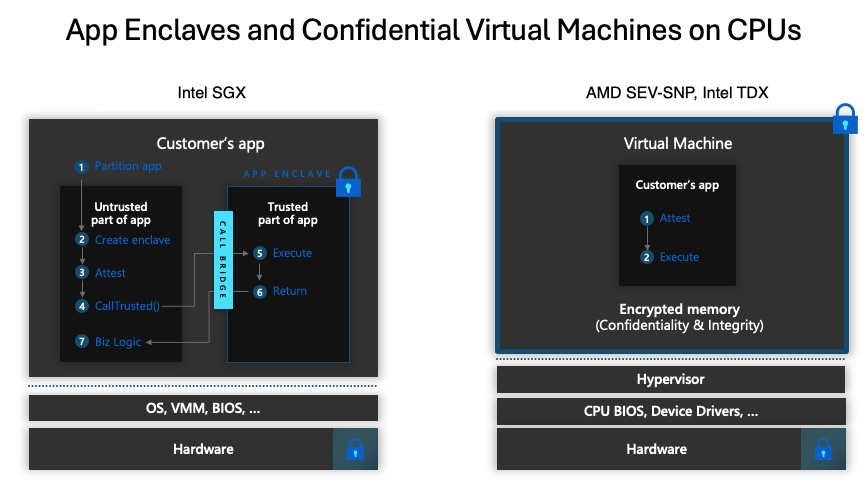

We’ve talked about encryption at rest and in transit, which are crucial for securing your data when it’s stored or being moved. But what about when your data is actively being processed in the cloud? This is where Confidential Computing comes into play.

Under the hood, Confidential Computing leverages hardware-based Trusted Execution Environments (TEEs) to create isolated and protected memory regions where sensitive data can be processed. These TEEs, often referred to as secure enclaves, are cryptographically isolated from the rest of the system, including the operating system, the hypervisor, other applications, and even the cloud provider itself. The processor’s hardware enforces this isolation, ensuring that only authorized code running within the enclave can access the data. When data enters the enclave, it can be decrypted, processed, and then encrypted again before leaving, all within this protected space.

Think of it like this: imagine you have a highly sensitive document. Encryption at rest is like storing it in a locked safe. Encryption in transit is like transporting it in an armored vehicle. Confidential Computing is like having a secure, soundproof room where the document can be read and worked on, and no one outside that room can see or hear what’s happening inside, even if they are in the same building.

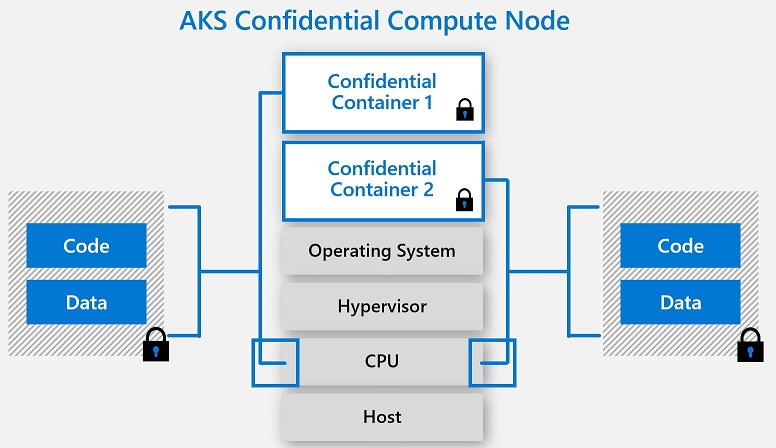

AKS Confidential Compute: Securing Your Containerized Workloads

Within the Azure ecosystem, AKS Confidential Compute brings these protections to your containerized applications. By deploying your AKS nodes on confidential virtual machines (VMs), you can run your containers within these hardware-backed TEEs. This means that the code and data in your confidential containers benefit from the same hardware-based isolation and memory encryption described above.

When you deploy a confidential container in AKS, the underlying confidential VM ensures that the entire node, and therefore the containers running on it, operate within a secure environment. This extends the security perimeter down to the hardware level, offering a significantly enhanced level of protection for your most sensitive containerized workloads.

The Benefits of Confidential Computing

The adoption of Confidential Computing, particularly in platforms like AKS, unlocks compelling benefits:

- By encrypting data in use, Confidential Computing drastically reduces the attack surface. Even if there’s a compromise at the OS or hypervisor level, the data being actively processed within the secure enclave remains protected. This is a game-changer for scenarios where regulatory compliance or the sensitivity of the data demands the highest levels of privacy.

- For organizations hesitant to move highly sensitive workloads to the public cloud due to trust concerns, Confidential Computing offers a powerful solution. The hardware-backed guarantees of data confidentiality can overcome these hesitations, enabling broader cloud adoption for regulated industries and those handling critical intellectual property.

- Confidential Computing facilitates secure collaboration where multiple organizations can contribute sensitive data to a shared computation without revealing the raw data to each other. This opens up possibilities for privacy-preserving analytics, federated learning, and secure data sharing across organizational boundaries.

- While external threats are often the focus, Confidential Computing also helps to mitigate insider threats. Even privileged users within the cloud provider’s infrastructure have no access to the data being processed within the secure enclaves.

- Industries like finance, healthcare, and government face increasingly strict regulations regarding data privacy and security. Confidential Computing can be a critical technology in meeting these requirements, providing a demonstrable layer of protection for sensitive data.

- The enhanced security and privacy offered by Confidential Computing can unlock entirely new use cases for the cloud, such as secure blockchain applications, privacy-preserving AI, and confidential data analytics.

Confidential Computing represents a significant evolution in cloud security, moving beyond protecting data at rest and in transit to safeguarding it during its most vulnerable state: when it’s being actively used. Within AKS, this translates to a powerful capability to protect your most sensitive containerized applications with hardware-enforced guarantees of confidentiality.

Hands-On with AKS Confidential Compute

Now it’s time to experience the enhanced security of Confidential Compute in Azure Kubernetes Service. This guide walks you through setting up Confidential Compute nodes in AKS, both in a new cluster and in an existing one, with the option to use Customer Managed Keys (CMK) for disk encryption.

⚠️ Note: This guide is for demonstration purposes and the covered configuration choices do not adhere to best practices for production scenarios.

Prerequisites

Before diving into the next steps, ensure you have the following prerequisites in place:

- Azure Subscription with Owner Permissions: You’ll need an active Azure subscription with Owner permissions to create and manage resources in Azure.

- Azure CLI: Install Azure CLI (version 2.57.0 or later) to interact with Azure services from the command line. You can install Azure CLI by following the instructions in Microsoft’s official documentation.

- kubectl and kubelogin: Ensure kubectl is installed on your system, along with kubelogin to log in to Azure Kubernetes Service (AKS) using Entra ID credentials. You can install both by following the instructions in Microsoft’s documentation, or with the az aks install-cli command.

- AKS-Preview CLI extension: Ensure the aks-preview CLI extension is installed and up to date (az extension add --name aks-preview, or az extension update --name aks-preview if it’s already installed).
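If you script this setup, it can help to check the Azure CLI version against the 2.57.0 minimum before anything else runs. The sketch below is a small, hypothetical helper (the function names are ours, not part of Azure CLI); it relies on GNU sort's -V version ordering so that, for example, 2.9.0 is correctly treated as older than 2.57.0.

```shell
#!/usr/bin/env bash
# Minimal sketch: compare an Azure CLI version string against the 2.57.0
# minimum from the prerequisites. Uses GNU sort -V (version sort).

MIN_AZ_VERSION="2.57.0"

# version_ge A B -> exit status 0 when version A >= version B.
version_ge() {
  [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# check_az_version VERSION -> prints a verdict; non-zero status if too old.
check_az_version() {
  if version_ge "$1" "$MIN_AZ_VERSION"; then
    echo "ok: azure-cli $1"
  else
    echo "too old: azure-cli $1 (need >= $MIN_AZ_VERSION)" >&2
    return 1
  fi
}

# Example against a live install (requires the az CLI):
#   check_az_version "$(az version --query '"azure-cli"' -o tsv)"
```

Comparing versions with sort -V avoids the classic pitfall of plain string comparison, where "2.9.0" would sort after "2.57.0".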

Scenario 1: Greenfield Deployment

This scenario is for when you are setting up a brand new AKS cluster with Confidential Compute capabilities.

Step 1: Open your terminal and log in using the az login command. Then set the correct subscription with the command below:

az account set --subscription "<your-subscription-id>"

Step 2: Create a resource group to organize your resources:

az group create --name azurebeastConfidentialRG --location westeurope

Step 3: If you want to use Customer Managed Keys for the managed disks of your Confidential Compute nodes, run the commands below:

az keyvault create --resource-group azurebeastConfidentialRG --name azurebeastConfidentialKV --location westeurope --enable-purge-protection true --enable-rbac-authorization false
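Key Vault names are globally unique and follow strict rules: 3 to 24 characters, letters, digits, and hyphens only, starting with a letter, ending with a letter or digit, and without consecutive hyphens. Note that azurebeastConfidentialKV sits exactly at the 24-character limit, so appending a suffix to make it unique will push it over. The hypothetical helper below is a sketch based on those documented rules; it cannot check global uniqueness, which only Azure can confirm.

```shell
#!/usr/bin/env bash
# Hypothetical helper: check a Key Vault name against the documented naming
# rules: 3-24 characters, letters/digits/hyphens only, starts with a letter,
# ends with a letter or digit, no consecutive hyphens.
# It does NOT check global uniqueness.
is_valid_kv_name() {
  local name="$1"
  (( ${#name} >= 3 && ${#name} <= 24 )) || return 1
  [[ $name =~ ^[A-Za-z][A-Za-z0-9-]*[A-Za-z0-9]$ ]] || return 1
  [[ $name != *--* ]]
}

# Example:
#   is_valid_kv_name azurebeastConfidentialKV && echo "name ok"
```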

az keyvault key create --vault-name azurebeastConfidentialKV --name azurebeastDiskEncryptionKey --size 2048 --protection software

You’ll need a Disk Encryption Set linked to your Key Vault key. The Disk Encryption Set’s own managed identity needs permission to use the key, and the AKS cluster’s managed identity needs access to the Disk Encryption Set. We’ll use system-assigned managed identities here; alternatively, you can create a user-assigned managed identity. Because customer-managed keys for node OS disks can only be configured when the cluster is created, the Disk Encryption Set has to exist before the cluster does.

Step 4: Create the Disk Encryption Set and give its managed identity access to the key, using the commands below:

KEY_VAULT_ID=$(az keyvault show --resource-group azurebeastConfidentialRG --name azurebeastConfidentialKV --query id -o tsv)

KEY_URL=$(az keyvault key show --vault-name azurebeastConfidentialKV --name azurebeastDiskEncryptionKey --query key.kid -o tsv)

az disk-encryption-set create --resource-group azurebeastConfidentialRG --name azurebeastDiskEncryptionSet --location westeurope --source-vault $KEY_VAULT_ID --key-url $KEY_URL

DES_ID=$(az disk-encryption-set show --resource-group azurebeastConfidentialRG --name azurebeastDiskEncryptionSet --query id -o tsv)

DES_PRINCIPAL_ID=$(az disk-encryption-set show --resource-group azurebeastConfidentialRG --name azurebeastDiskEncryptionSet --query identity.principalId -o tsv)

az keyvault set-policy --name azurebeastConfidentialKV --resource-group azurebeastConfidentialRG --object-id $DES_PRINCIPAL_ID --key-permissions get wrapkey unwrapkey

Step 5: Create the AKS cluster with the confidential computing (confcom) add-on, and point its node OS disks at the Disk Encryption Set:

az aks create --resource-group azurebeastConfidentialRG --name azurebeastConfidentialAKS --node-count 1 --node-vm-size Standard_DC2s_v2 --enable-addons monitoring,confcom --generate-ssh-keys --node-osdisk-type Managed --node-osdisk-diskencryptionset-id $DES_ID

Step 6: Get the principal ID of the cluster’s system-assigned managed identity and grant it access to the Disk Encryption Set:

AKS_OBJECT_ID=$(az aks show --resource-group azurebeastConfidentialRG --name azurebeastConfidentialAKS --query identity.principalId -o tsv)

az role assignment create --assignee $AKS_OBJECT_ID --role "Contributor" --scope $DES_ID

Step 7: Now add a confidential node pool. Node pools added to the cluster inherit its Disk Encryption Set for their OS disks:

az aks nodepool add --resource-group azurebeastConfidentialRG --cluster-name azurebeastConfidentialAKS --name confcompool --node-count 1 --node-vm-size Standard_DC2s_v2 --node-osdisk-type Managed

Step 8: Lastly, run the command below to interact with your cluster:

az aks get-credentials --resource-group azurebeastConfidentialRG --name azurebeastConfidentialAKS

Congratulations, you’ve just created a new cluster with confidential compute nodes. If you don’t need CMK, skip the Key Vault and Disk Encryption Set commands, create the cluster with the confcom add-on and without the Disk Encryption Set parameters, and use a confidential computing VM size for the node pool.
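The key identifier (kid) that az keyvault key show returns is a full URL of the form https://<vault-name>.vault.azure.net/keys/<key-name>/<key-version>. Splitting it on "/" puts the key name in field 5 and the version in field 6, so it is easy to grab the wrong piece with cut. If you ever need the parts separately, the sketch below pulls them out with plain Bash parameter expansion; the function names and the sample version string in the comments are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: split a Key Vault key identifier ("kid") into its parts using plain
# Bash parameter expansion. Assumed format:
#   https://<vault-name>.vault.azure.net/keys/<key-name>/<key-version>
# Split on "/", the fields are 1="https:", 2="", 3=host, 4="keys",
# 5=key name, 6=version.

kid_version()   { printf '%s\n' "${1##*/}"; }                         # text after the last "/"
kid_key_name()  { local p="${1%/*}"; printf '%s\n' "${p##*/}"; }      # second-to-last segment
kid_vault_url() { printf '%s\n' "${1%%/keys/*}"; }                    # scheme + host only

# Example against a live vault (requires the az CLI):
#   KID=$(az keyvault key show --vault-name azurebeastConfidentialKV \
#         --name azurebeastDiskEncryptionKey --query key.kid -o tsv)
#   kid_version "$KID"
```

Parameter expansion keeps this free of external tools and makes the intent (last segment, second-to-last segment, prefix) explicit rather than relying on a field number.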

Scenario 2: Brownfield Deployment

This scenario is for when you have an existing AKS cluster and want to add a node pool with Confidential Compute nodes. We’ll also set up the Key Vault and Disk Encryption Set used for customer-managed keys (CMK).

Step 1: Open your terminal and log in using the az login command. Then set the correct subscription with the command below:

az account set --subscription "<your-subscription-id>"

Step 2: If you want to use Customer Managed Keys for the managed disks of your Confidential Compute nodes, run the commands below:

az keyvault create --resource-group yourExistingResourceGroup --name azurebeastConfidentialKV --location westeurope --enable-purge-protection true --enable-rbac-authorization false

az keyvault key create --vault-name azurebeastConfidentialKV --name azurebeastDiskEncryptionKey --size 2048 --protection software

You’ll need a Disk Encryption Set linked to your Key Vault key. The Disk Encryption Set’s own managed identity needs permission to use the key, and the AKS cluster’s managed identity needs access to the Disk Encryption Set. We’ll assume system-assigned managed identities here.

Get the principal ID of the AKS cluster’s system-assigned managed identity (in the next step). Alternatively, you can create a user-assigned managed identity. For simplicity in this scenario, we’ll proceed assuming system-assigned.
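The principal ID lookups in this walkthrough feed straight into permission commands, and an az ... --query ... -o tsv lookup with a wrong query path typically prints an empty string instead of failing. The hypothetical helper below is a minimal sketch that stops the script early in that case rather than passing a blank --object-id or --assignee along.

```shell
#!/usr/bin/env bash
# Hypothetical helper: stop early when an ID captured from an `az ... -o tsv`
# lookup is empty, instead of passing "" to later commands.
require_value() {
  local label="$1" value="${2-}"
  if [ -z "$value" ]; then
    echo "error: $label is empty; check the --query path and resource names" >&2
    return 1
  fi
  printf '%s\n' "$value"
}

# Example (requires the az CLI and an existing cluster):
#   AKS_OBJECT_ID=$(az aks show --resource-group "$EXISTING_RG" \
#                   --name "$EXISTING_AKS" --query identity.principalId -o tsv)
#   require_value AKS_OBJECT_ID "$AKS_OBJECT_ID" > /dev/null || exit 1
```

Echoing the value back on success lets you also use it inline, for example VAR=$(require_value NAME "$VAR") || exit 1.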

Step 3: Get the principal ID using the following command:

EXISTING_RG="yourExistingResourceGroup"

EXISTING_AKS="yourExistingAKSClusterName"

AKS_OBJECT_ID=$(az aks show --resource-group $EXISTING_RG --name $EXISTING_AKS --query identity.principalId -o tsv)

Step 4: Next, create the Disk Encryption Set and grant permissions using the commands below:

KEY_VAULT_ID=$(az keyvault show --resource-group yourExistingResourceGroup --name azurebeastConfidentialKV --query id -o tsv)

KEY_URL=$(az keyvault key show --vault-name azurebeastConfidentialKV --name azurebeastDiskEncryptionKey --query key.kid -o tsv)

az disk-encryption-set create --resource-group yourExistingResourceGroup --name azurebeastDiskEncryptionSet --location westeurope --source-vault $KEY_VAULT_ID --key-url $KEY_URL

DES_ID=$(az disk-encryption-set show --resource-group yourExistingResourceGroup --name azurebeastDiskEncryptionSet --query id -o tsv)

DES_PRINCIPAL_ID=$(az disk-encryption-set show --resource-group yourExistingResourceGroup --name azurebeastDiskEncryptionSet --query identity.principalId -o tsv)

az keyvault set-policy --name azurebeastConfidentialKV --resource-group yourExistingResourceGroup --object-id $DES_PRINCIPAL_ID --key-permissions get wrapkey unwrapkey

az role assignment create --assignee $AKS_OBJECT_ID --role "Contributor" --scope $DES_ID

Step 5: Enable the confidential computing (confcom) add-on on the cluster if it isn’t enabled yet, then use the az aks nodepool add command with a confidential computing VM size:

az aks enable-addons --addons confcom --resource-group $EXISTING_RG --name $EXISTING_AKS

az aks nodepool add --resource-group $EXISTING_RG --cluster-name $EXISTING_AKS --name confcompool --node-count 1 --node-vm-size Standard_DC2s_v2

Keep in mind that AKS only encrypts node OS disks with customer-managed keys when the cluster is created with --node-osdisk-diskencryptionset-id; node pools added later inherit that setting, and az aks nodepool add has no per-pool Disk Encryption Set option. On an existing cluster created without one, the new pool’s OS disks use platform-managed keys, and the Disk Encryption Set above is mainly useful for data disks, for example through the diskEncryptionSetID parameter of an Azure Disk CSI driver StorageClass.

Step 6: Lastly, run the command below to interact with your cluster:

az aks get-credentials --resource-group $EXISTING_RG --name $EXISTING_AKS

Congratulations, you’ve just added confidential compute nodes to your existing cluster.

Verifying Confidential Nodes and CMK

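In both scenarios, you can verify the Confidential Compute nodes by running kubectl get nodes --show-labels and checking the node labels (the node.kubernetes.io/instance-type label shows the VM size), and by running kubectl describe node and looking for the SGX resources, such as sgx.intel.com/epc, that the confcom add-on advertises. If you configured CMK, you can verify that the disks are encrypted with your key by inspecting the Disk Encryption Set status and the properties of the virtual machine scale set associated with your node pool.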
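To narrow the node listing down to the confidential nodes, you can print the instance-type label as an extra column and filter on the VM size. The sketch below is a hypothetical helper that assumes the output of kubectl get nodes --no-headers -L node.kubernetes.io/instance-type, where the label value is the last column, and treats any Standard_DC size (the family used in this walkthrough) as confidential. It only checks the SKU; it does not prove that enclaves are usable on the node.

```shell
#!/usr/bin/env bash
# Sketch: print the names of nodes whose VM size (last column) is in the
# Azure DC-series, e.g. Standard_DC2s_v2. Expects the output of:
#   kubectl get nodes --no-headers -L node.kubernetes.io/instance-type
# Checks the SKU only; it does not verify that SGX enclaves work.
confidential_nodes() {
  awk '$NF ~ /^Standard_DC/ { print $1 }'
}

# Example against a live cluster (requires kubectl and cluster credentials):
#   kubectl get nodes --no-headers -L node.kubernetes.io/instance-type | confidential_nodes
```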

Closing Words

The world of cloud computing is increasingly shaped by geopolitical forces, regulatory demands, and the ever-present need for robust security and privacy. While concerns around data sovereignty, security, and vendor lock-in are valid, the innovation engine of the public cloud, particularly Microsoft Azure, continues to offer unmatched opportunities for growth and modernization.

Technologies like Confidential Computing represent a significant leap forward in addressing critical security and privacy requirements, allowing you to protect your most sensitive data even while it’s being processed in the cloud. Coupled with Azure’s commitment to compliance, its EU Data Boundary initiative, and customer-centric security features, the platform provides a strong foundation for navigating these complex times.

To learn more about Azure Confidential Compute and how it can benefit your specific scenarios, I recommend exploring the following resources:

Thank you for taking the time to go through this post and making it to the end. Stay tuned: we’ll keep providing more content on topics like this in the future.

Author: Rolf Schutten

Posted on: April 17, 2025