Simplifying Microservices Communication with Service Mesh on Azure

Managing modern applications has become increasingly complex as organizations transition from monolithic systems to distributed microservices architectures. This shift has brought tremendous scalability and flexibility, but it has also introduced new challenges in ensuring reliable, secure, and efficient communication between services.

In this blog, we’ll explore Service Mesh, a solution specifically designed to tackle these challenges. You’ll learn what a Service Mesh is, why it’s a game-changer for managing microservices communication, and how it integrates seamlessly with Azure Kubernetes Service (AKS). We’ll also provide a step-by-step guide to set up and demo a Service Mesh, so you can see it in action.

What is a Service Mesh?

A Service Mesh is a dedicated infrastructure layer designed to manage and optimize communication between microservices within a distributed application. It acts as a network of lightweight proxies that oversee how services interact, enabling secure, reliable, and observable communication without requiring significant changes to the application code.

In simpler terms, a Service Mesh is like a traffic control system for microservices. It ensures that requests are routed efficiently, securely, and with full visibility, handling tasks like retries, load balancing, and encryption. This allows developers to focus on building features, while operational complexities such as service discovery and traffic management are handled transparently by the mesh.

The Sidecar Proxy Model

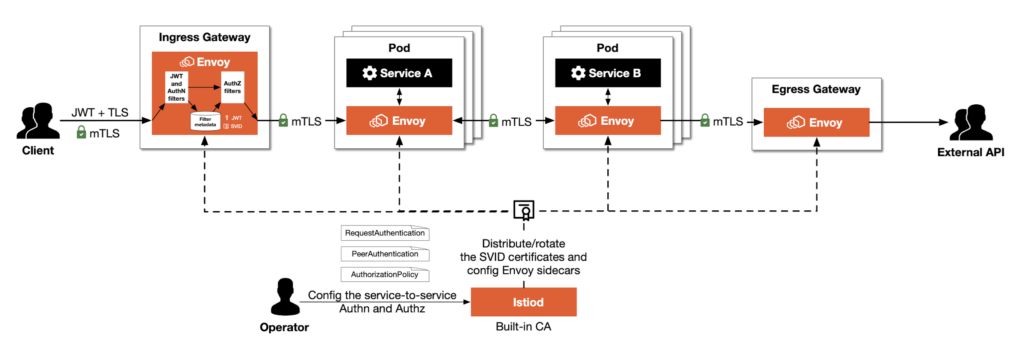

At the core of a Service Mesh is the sidecar proxy model. Instead of microservices communicating directly with each other, each service is paired with a small, independent proxy (known as a sidecar) that runs alongside it. These sidecars handle all communication on behalf of the microservice, intercepting requests and responses to provide features like:

- Routing and Traffic Control: Determining the best destination for each request.

- Security: Enforcing mutual TLS (mTLS) for encrypted communication.

- Observability: Capturing metrics, logs, and traces for performance monitoring and debugging.

For example, when Service A needs to talk to Service B, it doesn’t contact Service B directly. Instead, Service A’s outbound request is transparently intercepted by its own sidecar proxy, with no changes to Service A’s code. This proxy then consults the mesh’s rules to determine how and where to send the request, ensuring all communication adheres to predefined policies.

Key Components of a Service Mesh

- Sidecar Proxies: Sidecar proxies form the backbone of a Service Mesh. These lightweight agents are typically based on powerful tools like Envoy, which is widely used in solutions like Istio and Consul Connect. Sidecar proxies are deployed alongside each microservice, taking on the responsibility for all incoming and outgoing network traffic.

- Control Plane vs. Data Plane: The functionality of a Service Mesh is divided into two main layers:

  - Data Plane: This is where the actual data flow happens. It consists of the sidecar proxies that intercept and process network traffic between microservices. The data plane ensures traffic is routed efficiently, securely, and with complete observability.

  - Control Plane: The control plane acts as the brain of the Service Mesh. It is responsible for managing configuration, distributing traffic policies, and coordinating communication across the mesh. Examples include Istio’s istiod (which consolidated the earlier Pilot, Citadel, and Galley components) and Linkerd’s control plane.

In this architecture, the control plane and data plane work together to abstract away the complexities of service-to-service communication, allowing teams to implement advanced traffic management and security policies with ease.

With these components, a Service Mesh provides a robust foundation for building scalable, secure, and maintainable microservices architectures.

Popular Service Mesh Solutions

The Service Mesh ecosystem is rich with solutions tailored to diverse operational needs. While all Service Mesh technologies share common goals—improving service-to-service communication, enhancing security, and providing observability—they differ in their implementation, ecosystem integration, and complexity.

Here’s a high-level comparison of the most popular Service Mesh solutions:

Istio

- Features: Advanced traffic management, mTLS for secure communication, and rich telemetry capabilities.

- Complexity: High; requires effort to configure and maintain, but offers a powerful feature set.

- Use Case: Ideal for enterprises needing robust policy enforcement and deep observability.

- Adoption: A popular choice for Kubernetes-based environments, supported by Envoy as its data plane.

Linkerd

- Features: Lightweight and designed for simplicity, with a focus on fast performance and ease of use.

- Complexity: Low compared to Istio, making it suitable for teams new to Service Mesh.

- Use Case: Quick adoption in small to medium-sized microservices architectures.

- Strength: Minimalistic approach with built-in Kubernetes integration.

HashiCorp Consul Connect

- Features: Seamless integration with HashiCorp’s ecosystem, including Terraform and Vault.

- Complexity: Moderate; offers service discovery, secure service-to-service communication, and configuration via Consul KV store.

- Use Case: Organizations already leveraging HashiCorp tools for infrastructure and security.

- Strength: Tight integration with multi-cloud environments and non-Kubernetes workloads.

High-Level Common Features

While these solutions differ in approach and complexity, they share several foundational capabilities:

- Traffic Management: Intelligent routing, retries, and load balancing.

- Security: End-to-end encryption with mTLS.

- Observability: Metrics, logs, and distributed tracing for monitoring.

- Service Discovery: Automatic discovery of services within the mesh.

Azure Integration

Azure Kubernetes Service (AKS) is a managed Kubernetes offering that supports deploying and managing Service Mesh solutions seamlessly. Here’s how these Service Meshes integrate with AKS:

Istio on AKS

- Compatibility: Fully supported, with Azure Marketplace templates available for quick setup.

- Usage: Ideal for organizations requiring enterprise-grade features and support.

Linkerd on AKS

- Compatibility: Works well in AKS environments with minimal setup.

- Usage: A great choice for smaller teams prioritizing simplicity.

Consul Connect on AKS

- Compatibility: Can be deployed on AKS with HashiCorp Consul Helm charts or Terraform scripts.

- Usage: Best suited for teams leveraging HashiCorp’s ecosystem or hybrid workloads.

Azure’s support for Kubernetes-native tools, combined with its integration with Azure Monitor and Azure Policy, makes it an excellent platform for running Service Mesh solutions. Whether you prioritize simplicity or advanced features, AKS provides the scalability and flexibility to deploy your preferred Service Mesh effectively.

Why Use a Service Mesh?

The adoption of Service Meshes is growing rapidly in distributed microservices architectures due to the significant advantages they bring in managing inter-service communication. However, they are not a one-size-fits-all solution, and their value depends heavily on the specific challenges and scale of your application.

Enhanced Security: mTLS Encryption

A Service Mesh provides built-in security features, such as mutual TLS (mTLS), to encrypt service-to-service communication. This ensures:

- Confidentiality: Communication between services is encrypted, preventing unauthorized access.

- Integrity: Messages cannot be tampered with during transit.

- Authentication: Services verify each other’s identities before exchanging data.

Without a Service Mesh, implementing mTLS at scale can be labor-intensive, requiring custom development and configuration. The mesh simplifies this by automating certificate management and rotation.
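As a sketch of how little configuration mesh-wide enforcement takes, the snippet below writes a PeerAuthentication manifest that requires mTLS for every sidecar-injected workload. It assumes that aks-istio-system is the add-on’s Istio root namespace (a policy there without a workload selector applies to the whole mesh) and that your Istio revision serves the security.istio.io/v1beta1 API; the file name is arbitrary.

```shell
# Write a mesh-wide PeerAuthentication manifest (it is not applied automatically).
# Assumption: aks-istio-system is the add-on's Istio root namespace, so a policy
# there with no workload selector applies to every namespace in the mesh.
cat > mesh-mtls-strict.yaml <<'EOF'
apiVersion: security.istio.io/v1beta1
kind: PeerAuthentication
metadata:
  name: default
  namespace: aks-istio-system
spec:
  mtls:
    mode: STRICT   # reject plaintext traffic to sidecar-injected workloads
EOF
```

You would apply it with `kubectl apply -f mesh-mtls-strict.yaml`. STRICT mode rejects plaintext requests from clients that have no sidecar, so it is worth confirming that every caller is part of the mesh before enforcing it cluster-wide.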

Observability: Metrics, Logs, and Distributed Tracing

Monitoring microservices can be daunting due to their distributed nature. A Service Mesh provides out-of-the-box observability features, enabling developers and operators to:

- Collect detailed metrics (e.g., latency, throughput, error rates) for every service.

- Aggregate and analyze logs to debug issues efficiently.

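- Perform distributed tracing to visualize the flow of requests across multiple services, pinpointing bottlenecks or failures.

By consolidating these capabilities into a single platform, Service Meshes reduce the need for additional instrumentation or manual logging. Distributed tracing is the exception: applications still need to forward trace context headers on their outgoing requests, because the proxies cannot correlate inbound and outbound calls on their own.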
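To illustrate switching on one of these signals without touching application code, the sketch below writes an Istio Telemetry resource that enables Envoy access logs for workloads in the default namespace. It assumes the add-on accepts the Telemetry API and that Istio’s built-in envoy access-log provider is available; on Istio 1.22 and later, telemetry.istio.io/v1 can be used instead of v1alpha1.

```shell
# Write a Telemetry manifest that turns on access logging for one namespace.
# Assumptions: the Telemetry API is available on the add-on's Istio revision,
# and "envoy" is Istio's built-in access-log provider name.
cat > default-access-logging.yaml <<'EOF'
apiVersion: telemetry.istio.io/v1alpha1
kind: Telemetry
metadata:
  name: access-logging
  namespace: default      # scope: workloads in the default namespace only
spec:
  accessLogging:
  - providers:
    - name: envoy         # sidecars write access logs to their stdout
EOF
```

After `kubectl apply -f default-access-logging.yaml`, requests handled by a pod’s sidecar appear in its log stream, for example via `kubectl logs <pod-name> -c istio-proxy`.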

Traffic Control: Load Balancing, Retries, and Circuit Breaking

Managing traffic between microservices is critical to ensure high availability and reliability. A Service Mesh automates:

- Load Balancing: Efficiently distributing requests across service instances.

- Retries: Automatically re-sending failed requests to handle transient issues.

- Circuit Breaking: Preventing cascading failures by isolating services that are unresponsive or overloaded.

These mechanisms not only enhance the user experience but also provide resilience to your application under varying loads and conditions.
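To make load balancing and circuit breaking concrete, here is a sketch of an Istio DestinationRule for the ratings service of the Bookinfo sample used later in this post. The thresholds are illustrative assumptions, not recommendations: connectionPool limits connections and queued requests to the service, while outlierDetection temporarily ejects an instance from the load-balancing pool after consecutive 5xx errors, which is how Istio implements circuit breaking.

```shell
# Write a DestinationRule that configures load balancing and circuit breaking
# for the Bookinfo "ratings" service. All numeric limits are example values.
cat > ratings-circuit-breaker.yaml <<'EOF'
apiVersion: networking.istio.io/v1alpha3
kind: DestinationRule
metadata:
  name: ratings
spec:
  host: ratings
  trafficPolicy:
    loadBalancer:
      simple: LEAST_REQUEST          # prefer instances with fewer active requests
    connectionPool:
      tcp:
        maxConnections: 100          # max TCP connections to the ratings host
      http:
        http1MaxPendingRequests: 10  # max requests queued waiting for a connection
        maxRequestsPerConnection: 10
    outlierDetection:                # circuit breaking: eject failing instances
      consecutive5xxErrors: 5
      interval: 30s
      baseEjectionTime: 60s
      maxEjectionPercent: 50
EOF
```

Once Bookinfo is running, the rule can be applied with `kubectl apply -f ratings-circuit-breaker.yaml`.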

Service Discovery and Fault Tolerance

Service Meshes streamline service discovery by automatically detecting services as they are deployed and updating routing rules accordingly. This ensures:

- Seamless scaling: New instances are automatically added to the routing table.

- Fault tolerance: Requests can be rerouted to healthy instances when failures occur.

Without a Service Mesh, implementing these capabilities typically requires custom configuration with service registries or DNS.

Use Cases for a Service Mesh

While a Service Mesh can be a game-changer, it’s not suitable for every scenario. Here are situations where it adds the most value:

- Large-Scale Microservices Architectures: When managing dozens or hundreds of microservices, a Service Mesh simplifies communication, enhances security, and provides a unified observability layer.

- Strict Security Requirements: Industries like finance and healthcare often require encrypted communication and zero-trust architectures, both of which are easily implemented with a Service Mesh.

- Frequent Deployment and Scaling: Teams practicing continuous integration/continuous deployment (CI/CD) or scaling services dynamically benefit from the Service Mesh’s automated service discovery and traffic routing.

- Multi-Cluster or Multi-Cloud Deployments: A Service Mesh is ideal for organizations running services across multiple Kubernetes clusters or cloud providers, ensuring consistent communication and policies across environments.

Potential Drawbacks of a Service Mesh

Despite its benefits, a Service Mesh introduces certain trade-offs that should be considered:

- Added Complexity: Service Meshes require additional components, such as sidecar proxies and a control plane, which need to be deployed, configured, and maintained. They introduce new abstractions that teams must learn, which can lengthen onboarding time and increase operational complexity.

- Resource Overhead: The sidecar proxies consume CPU and memory resources, which can be significant in resource-constrained environments. For smaller applications, this overhead might outweigh the benefits, making a Service Mesh less appealing.

- Operational Burden: Managing and upgrading a Service Mesh can be challenging, especially in large-scale deployments. Misconfiguration can lead to unexpected issues, such as degraded performance or security vulnerabilities.

When to Avoid a Service Mesh

A Service Mesh may not be necessary if you have a monolithic application or a small number of microservices, your architecture doesn’t require advanced traffic management or security features, or if your team lacks the bandwidth or expertise to manage the additional complexity.

A Service Mesh can be transformative for modern, distributed systems, providing robust features like enhanced security, observability, and traffic control. However, its adoption should be guided by a clear understanding of its trade-offs. By carefully evaluating your architecture and operational needs, you can determine whether a Service Mesh is the right fit for your application.

Setting Up a Service Mesh on Azure Kubernetes Service (AKS)

In this step-by-step walkthrough, we’ll create an AKS cluster and enable the Istio-based Service Mesh add-on, which simplifies the deployment of Istio in your cluster. We’ll then deploy a sample microservices application into the AKS cluster. Next, we’ll set up a traffic management policy that splits requests between two versions of a service. Lastly, we’ll enforce secure communication using mTLS.

By the end of the walkthrough, you’ll have a working example of Istio on AKS, along with insights into its capabilities and integration with Azure-native services.

About the Istio-based Service Mesh Add-On

Microsoft offers an Istio-based Service Mesh add-on for Azure Kubernetes Service (AKS) that simplifies the deployment and management of Istio. While it builds on the open-source Istio project, the add-on introduces several enhancements tailored for Azure users:

- Managed Lifecycle and Scaling: Microsoft handles the scaling and configuration of Istio control plane components, as well as scaling AKS resources such as CoreDNS when the Service Mesh is enabled.

- Version Compatibility: Istio versions are tested and verified to work seamlessly with supported AKS versions.

- Azure Integrations: The add-on works with Azure Monitor (including managed Prometheus) and Azure Managed Grafana for observability, and it is verified for internal and external ingress setups.

- Support: Azure provides official support for the add-on, unlike self-managed Istio installations.

However, there are limitations to keep in mind:

- The add-on can’t be enabled on AKS clusters that already use the Open Service Mesh add-on or a self-managed Istio installation.

- Certain advanced Istio features, like egress gateways, multi-cluster support, and sidecar-less Ambient mode, are not yet supported.

- Customization through specific Istio custom resources (e.g., ProxyConfig, IstioOperator) is restricted.

These differences make the Istio-based add-on an appealing choice for users who want a simplified, Azure-optimized experience, though it may not yet suit all advanced use cases.

Prerequisites

Before setting up the Service Mesh, ensure the following requirements are met:

- Azure Subscription with Owner Permissions: You’ll need an active Azure subscription with Owner permissions to create and manage resources in Azure Kubernetes Service (AKS).

- Azure CLI: Install Azure CLI (version 2.57.0 or later) to interact with Azure services from the command line. You can install Azure CLI by following the instructions in Microsoft’s official documentation.

- kubectl and kubelogin: Ensure kubectl is installed on your system, along with kubelogin to log in to Azure Kubernetes Service (AKS) using Microsoft Entra ID credentials. You can install both by following the instructions in the official documentation, or with the az aks install-cli command.

- Visual Studio Code (optional): Have Visual Studio Code installed on your system for editing files and managing your project. You can download it from the official Visual Studio Code website.

Set Up an AKS Cluster

If you don’t already have an AKS cluster, you’ll first need to create one. The following steps show how to set it up using Azure CLI.

Step 1: Log in to Azure by running the command below:

az login

This will authenticate you to your Azure account. If you have multiple subscriptions, you can select the one you want to use by running the command below:

az account set --subscription "Your-Subscription-Name"

Step 2: Create a resource group to organize your AKS resources by running the command below:

az group create --name myResourceGroup --location northeurope

Step 3: Create a new AKS cluster within the resource group by running the command below:

az aks create --resource-group myResourceGroup --name myAKSCluster --node-count 3 --enable-addons monitoring --generate-ssh-keys

This command creates an AKS cluster named myAKSCluster with 3 nodes and enables the monitoring add-on (Azure Monitor). The --generate-ssh-keys option generates SSH keys if you don’t already have them.

Step 4: Once your AKS cluster is created, configure kubectl to use the cluster’s credentials by running the command below:

az aks get-credentials --resource-group myResourceGroup --name myAKSCluster

Now you can interact with the AKS cluster using kubectl.

Enable the Istio-Based Service Mesh Add-On

Now that we have our AKS cluster set up, we can enable the Istio-based Service Mesh add-on.

Step 5: To enable the Istio-based Service Mesh add-on, run the following command:

az aks mesh enable --resource-group myResourceGroup --name myAKSCluster

This command will install the Istio add-on, which simplifies the process of deploying Istio in your AKS cluster. Azure will handle the scaling and configuration of the Istio control plane.

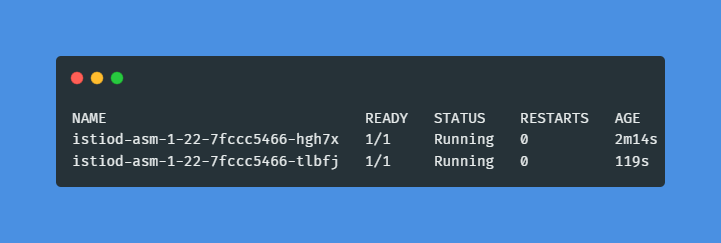

Step 6: Check the status of the Istio components using kubectl by running the command below:

kubectl get pods -n aks-istio-system

You should see the Istio control plane (istiod) pods with a status of Running.
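Before deploying workloads, the target namespace needs to be labeled for sidecar injection. The add-on doesn’t honor the usual istio-injection=enabled label; it injects sidecars only into namespaces labeled with the add-on’s revision (istio.io/rev=<revision>). The sketch below writes that label as a manifest. The revision asm-1-22 is only an example assumption; the commented az aks show query lists the revision(s) your cluster actually runs.

```shell
# Write a manifest that labels the default namespace for sidecar injection.
# REVISION is an assumption (asm-1-22 is only an example). List the revision(s)
# your cluster actually runs with:
#   az aks show --resource-group myResourceGroup --name myAKSCluster \
#     --query 'serviceMeshProfile.istio.revisions' -o tsv
REVISION="${REVISION:-asm-1-22}"

cat > default-namespace-injection.yaml <<EOF
apiVersion: v1
kind: Namespace
metadata:
  name: default
  labels:
    # The add-on ignores istio-injection=enabled; it needs the revision label.
    istio.io/rev: ${REVISION}
EOF
```

Apply it with `kubectl apply -f default-namespace-injection.yaml`; `kubectl label namespace default istio.io/rev=<revision>` achieves the same. Pods that existed before the label was added only receive a sidecar once they are recreated, for example with `kubectl rollout restart deployment -n default`.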

Deploy a Demo Application

With the Istio-based Service Mesh set up, it’s time to deploy a sample application that will use the Service Mesh features. We’ll deploy a simple microservices application to illustrate the Service Mesh’s capabilities.

For this demo, we’ll use Bookinfo, a simple application that is widely used to demonstrate Istio capabilities. It consists of a frontend service and several backend services:

- productpage

- details

- reviews (three versions: v1, v2, and v3)

- ratings

Step 7: Deploy the Bookinfo application by running the command below:

kubectl apply -f https://raw.githubusercontent.com/istio/istio/master/samples/bookinfo/platform/kube/bookinfo.yaml

Step 8: To check if the application has been successfully deployed, use the command below:

kubectl get services

You should see services such as productpage, details, reviews, and ratings listed in your cluster.
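Listing services confirms that the Services exist, but not that the pods joined the mesh. With injection working, each Bookinfo pod reports 2/2 in the READY column (the application container plus the istio-proxy sidecar). The helper below is a small sketch of that check; its name is hypothetical, and it assumes the default column layout of kubectl get pods.

```shell
# check_sidecars (hypothetical helper): reads `kubectl get pods --no-headers`
# output on stdin and reports pods whose READY column isn't 2/2, i.e. pods that
# are missing the istio-proxy sidecar or aren't fully ready yet.
# Exits 0 when every pod shows 2/2, and 1 otherwise.
check_sidecars() {
  awk '$2 != "2/2" { print "not 2/2 ready: " $1 " (" $2 ")"; bad = 1 }
       END { exit (bad ? 1 : 0) }'
}
```

Usage: `kubectl get pods -n default --no-headers | check_sidecars`. A pod showing 1/1 usually means the namespace wasn’t labeled for injection when the pod was created.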

Configure Key Features of the Service Mesh

Now, let’s configure some of the core features of the Service Mesh to enhance traffic management and security.

Step 9: We’ll configure Istio’s traffic management capabilities to control the flow of requests between services. We’ll create a VirtualService and a DestinationRule to control traffic routing between different versions of the reviews service.

Create a reviews-traffic.yaml file with the following content, which sends 80% of requests to v1 and 20% to v2:

apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: reviews
spec:
  hosts:
  - reviews
  http:
  - route:
    - destination:
        host: reviews
        subset: v1
      weight: 80
    - destination:
        host: reviews
        subset: v2
      weight: 20
---
apiVersion: networking.istio.io/v1alpha3
kind: DestinationRule
metadata:
  name: reviews
spec:
  host: reviews
  subsets:
  - name: v1
    labels:
      version: v1
  - name: v2
    labels:
      version: v2

Step 10: Apply this configuration by running the command below:

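kubectl apply -f reviews-traffic.yaml

You can verify that the traffic distribution is working by loading the productpage several times and observing which version of the reviews service responds: reviews v1 shows no star ratings, while v2 shows black stars, so roughly one in five page loads should include stars.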
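Retries and timeouts are configured on a VirtualService in the same way as weighted routing. The sketch below (values are illustrative assumptions) writes one for the ratings service, which reviews v2 and v3 call: each attempt gets 2 seconds, up to 3 retries are made for 5xx responses, connection failures, and resets, and the whole request is capped at 10 seconds.

```shell
# Write a VirtualService that adds retries and a timeout for the Bookinfo
# "ratings" service. The numbers are example values, not tuned recommendations.
cat > ratings-retries.yaml <<'EOF'
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: ratings
spec:
  hosts:
  - ratings
  http:
  - route:
    - destination:
        host: ratings
    timeout: 10s                           # overall budget for the request
    retries:
      attempts: 3                          # retry up to 3 times
      perTryTimeout: 2s                    # time limit for each attempt
      retryOn: 5xx,connect-failure,reset   # failures that trigger a retry
EOF
```

Apply it with `kubectl apply -f ratings-retries.yaml`. Keeping attempts × perTryTimeout below the overall timeout leaves room for every retry to actually run.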

Step 11: Istio already uses mutual TLS (mTLS) between sidecar-injected workloads by default, but in this PERMISSIVE mode it still accepts plaintext traffic. To enforce mTLS, so that services in the default namespace only accept encrypted traffic, create a PeerAuthentication resource in STRICT mode by running the command below:

kubectl apply -f - <<EOF
apiVersion: security.istio.io/v1beta1
kind: PeerAuthentication
metadata:
  name: default
  namespace: default
spec:
  mtls:
    mode: STRICT
EOF

This requires all workloads in the default namespace to accept only mTLS traffic, so communication between services in the mesh is encrypted, ensuring confidentiality and integrity. You can confirm that the policy is in place by running kubectl get peerauthentication -n default.

Test the Service Mesh

Now that we’ve deployed the demo application and configured key Service Mesh features, it’s time to test the setup.
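For the browser test to reach Bookinfo from outside the cluster, the add-on’s external ingress gateway needs an Istio Gateway and VirtualService that route to productpage. The sketch below is modeled on the standard Bookinfo ingress configuration. It assumes the external gateway’s pods carry the label istio: aks-istio-ingressgateway-external; the resource names bookinfo-gateway-external and bookinfo-vs-external are arbitrary.

```shell
# Write a Gateway bound to the add-on's external ingress gateway, plus a
# VirtualService that routes Bookinfo's paths to productpage on port 9080.
cat > bookinfo-gateway.yaml <<'EOF'
apiVersion: networking.istio.io/v1alpha3
kind: Gateway
metadata:
  name: bookinfo-gateway-external
spec:
  selector:
    istio: aks-istio-ingressgateway-external   # assumed gateway pod label
  servers:
  - port:
      number: 80
      name: http
      protocol: HTTP
    hosts:
    - "*"
---
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: bookinfo-vs-external
spec:
  hosts:
  - "*"
  gateways:
  - bookinfo-gateway-external
  http:
  - match:
    - uri:
        exact: /productpage
    - uri:
        prefix: /static
    - uri:
        exact: /login
    - uri:
        exact: /logout
    - uri:
        prefix: /api/v1/products
    route:
    - destination:
        host: productpage
        port:
          number: 9080
EOF
```

Apply it with `kubectl apply -f bookinfo-gateway.yaml`. The routes only take effect once the add-on’s external ingress gateway is enabled on the cluster.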

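Step 12: The add-on doesn’t create an ingress gateway by default, so first enable the external ingress gateway, then retrieve its external IP address (the EXTERNAL-IP column) by running the commands below:

az aks mesh enable-ingress-gateway --resource-group myResourceGroup --name myAKSCluster --ingress-gateway-type external

kubectl get svc aks-istio-ingressgateway-external -n aks-istio-ingress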

Open your browser and navigate to http://<EXTERNAL-IP>/productpage, replacing <EXTERNAL-IP> with the IP address you retrieved in your terminal.

Step 13: You can also check the logs of the productpage service to verify the versions of the reviews service it is contacting. Get the pod name for productpage by running the command below:

kubectl get pods -l app=productpage

Step 14: Use the pod name to view the logs of the productpage container (rather than its istio-proxy sidecar) by running the command below:

kubectl logs <productpage-pod-name> -c productpage

Look for requests routed to reviews and observe the version being contacted (e.g., reviews-v1, reviews-v2).
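If the output is long, a small filter can tally the versions for you. The function below is a hypothetical helper; it assumes the text you feed it contains names such as reviews-v1 or reviews-v2-<suffix>, and it simply counts each occurrence it finds.

```shell
# count_reviews_versions (hypothetical helper): reads text on stdin and counts
# occurrences of reviews-v<N>, most frequent first.
count_reviews_versions() {
  grep -oE 'reviews-v[0-9]+' | sort | uniq -c | sort -rn
}
```

Usage: `kubectl logs <productpage-pod-name> -c productpage | count_reviews_versions`. With the 80/20 VirtualService in place, reviews-v1 should dominate the counts over a large enough sample.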

By using the browser interface, distributed tracing tools, or application logs, you can confirm that traffic is being routed as specified in your VirtualService configuration.

Clean Up

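To avoid unnecessary costs, delete the resources you created once you’ve finished this walkthrough.

Step 15: Run the command below to delete the resource group and everything in it:

az group delete --name myResourceGroup --yes --no-wait

Step 16: Because --no-wait returns immediately, the deletion continues in the background. To confirm that it has finished, list your active resource groups by running the command below:

az group list --query "[].name"

Once the deletion completes, the resource group no longer appears in the list.

Step 17: Remove the cluster’s entries from your kubeconfig by running the commands below:

kubectl config delete-context myAKSCluster

kubectl config delete-cluster myAKSCluster

kubectl config delete-user clusterUser_myResourceGroup_myAKSCluster

Avoid deleting ~/.kube/config outright unless this cluster is the only one it contains, since the file also stores credentials for any other clusters you use. Your environment is now cleaned up, and you won’t incur any further charges for these resources.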

Closing Words

We’ve explored how a Service Mesh, specifically Istio on Azure Kubernetes Service (AKS), enhances the management of microservices communication. A Service Mesh simplifies traffic management, enforces security policies like mTLS, and provides deep observability. By abstracting the complexity of service-to-service communication, it empowers developers and DevOps professionals to focus on application logic rather than infrastructure.

However, as we’ve seen, implementing a Service Mesh comes with its own set of challenges, such as added complexity and resource overhead. These are important considerations before introducing a Service Mesh into your architecture. Nevertheless, for large-scale distributed systems with many microservices, the benefits in security, observability, and traffic control often outweigh the drawbacks.

The best way to understand and leverage a Service Mesh is by getting hands-on experience with it. To continue your journey with Service Mesh and Istio on AKS, Microsoft’s documentation for the Istio-based service mesh add-on and the official Istio documentation are good places to start.

Thank you for taking the time to read this post all the way to the end. Stay tuned, because we’ll continue to publish more content on topics like this.

Author: Rolf Schutten

Posted on: December 22, 2024